How We Upgraded GymBeam’s Infrastructure to the Ultra-Powerful EPYC Servers

How and why we improved GymBeam’s infrastructure, what’s so great about EPYC servers, and who will benefit most from this amazing hardware.

We made major upgrades to the infrastructure of one of the biggest e-commerce projects in the Czech Republic and Slovakia: GymBeam. And these weren’t minor tweaks: we replaced all the hardware in the application part of their cluster with extra-powerful AMD EPYC servers (8 of those bad boys in total).

How did the installation go, what does it mean for GymBeam, which advantages do EPYC servers provide, and should you be thinking of this upgrade yourself? You’ll find out all that and more in this article.

What’s so epic about EPYC servers?

Until recently, we at vshosting~ focused on Intel Xeon processors, which have dominated the server market (and not only that one) for many years. Over the last couple of years, however, AMD (Advanced Micro Devices) caught our attention with significant improvements to both its product portfolio and its manufacturing technology.

AMD now offers processors with a better price/performance ratio, more cores per CPU, and better energy efficiency (thanks in part to a different manufacturing process: 7 nm for AMD Zen 2 vs. 14 nm for Intel Xeon Scalable). These processors power the AMD EPYC servers we used for GymBeam’s new infrastructure.

These are state-of-the-art servers with record-breaking processors offering up to 64 cores and 128 threads (!!!). Compared to the standard Intel Xeon Scalable line, where we offer processors with at most 28 cores per CPU, that is more than double the number of cores.
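If you want to check how many physical cores and hardware threads a Linux server actually exposes, /proc/cpuinfo holds that information. The sketch below is a minimal illustration, assuming the x86 Linux /proc/cpuinfo layout in which each logical CPU is one block with `processor`, `physical id` and `core id` fields; the function name `count_cores_and_threads` is hypothetical. Logical CPUs that share the same socket and core id are SMT siblings of one physical core.

```python
from pathlib import Path


def count_cores_and_threads(cpuinfo: str) -> tuple[int, int]:
    """Return (physical_cores, logical_cpus) parsed from /proc/cpuinfo text."""
    physical_cores = set()
    logical_cpus = 0
    # Each logical CPU is described by one block; blocks are separated by blank lines.
    for block in cpuinfo.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.partition(":")
            if sep:
                fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue
        logical_cpus += 1
        # SMT siblings share the same socket ("physical id") and "core id",
        # so each unique pair is one physical core. Missing fields (e.g. in
        # some VMs) fall back to treating every logical CPU as its own core.
        socket = fields.get("physical id", "0")
        core = fields.get("core id", fields["processor"])
        physical_cores.add((socket, core))
    return len(physical_cores), logical_cpus


if __name__ == "__main__":
    cpuinfo_path = Path("/proc/cpuinfo")
    if cpuinfo_path.exists():
        cores, threads = count_cores_and_threads(cpuinfo_path.read_text())
        print(f"{cores} physical cores, {threads} logical CPUs")
```

On a dual-socket server with two 64-core EPYC CPUs and SMT enabled, this should report 128 physical cores and 256 logical CPUs.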

EPYC processors are manufactured on a 7 nm process and use a multi-chip design (several “chiplets” in a single package), which makes it possible to pack all 64 cores into a single CPU and deliver truly noteworthy performance.

How did the installation go?

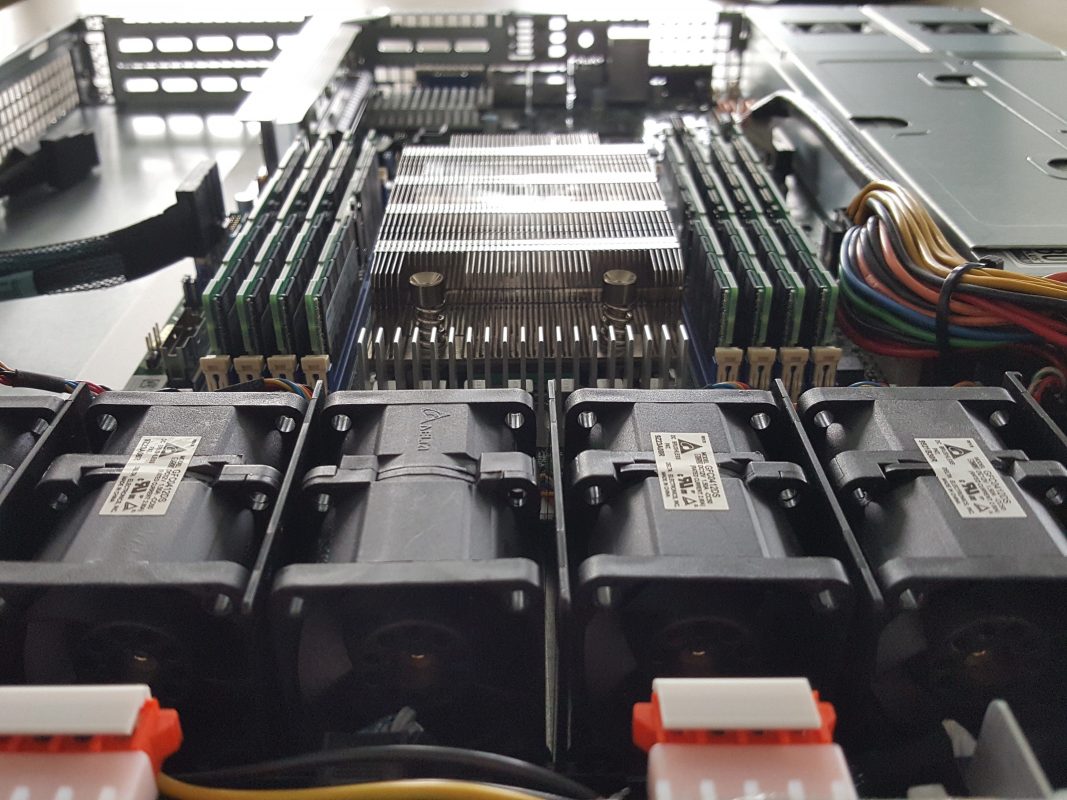

The installation of the first servers based on this new platform went flawlessly. Our first step was careful component selection and unifying the platform for all future installations. The most important part at the very beginning was choosing the best possible platform architecture together with our suppliers and specialists. This included choosing the best chassis, server board, and peripherals, including more powerful 20k RPM fans for sufficient cooling. We will use this setup for all future AMD EPYC installations. We were determined that the new platform would meet the same high standard as our other deployments, with no room for compromise.

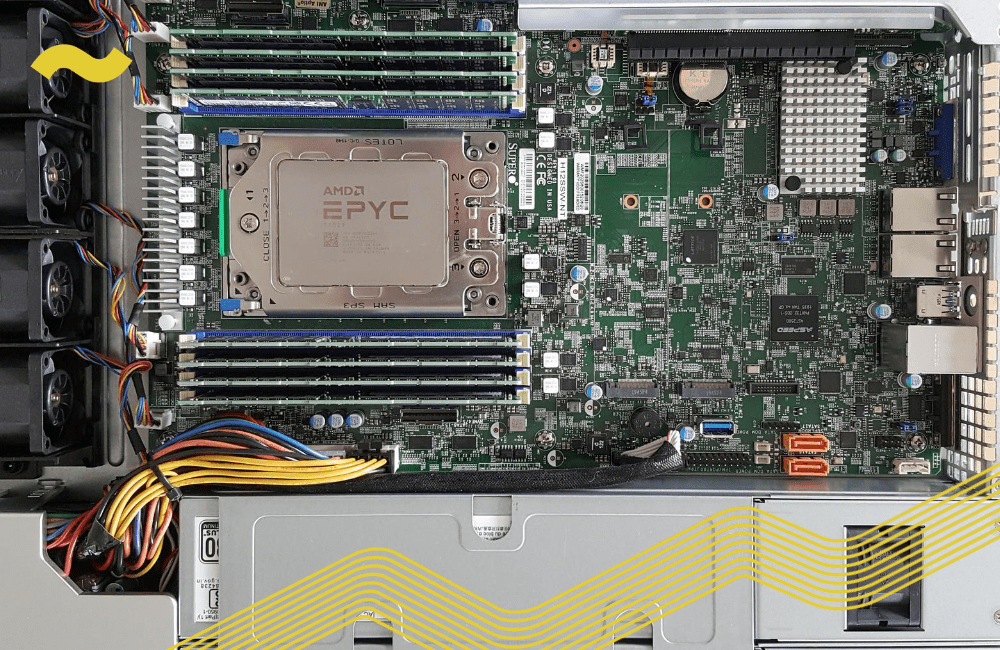

As a result, the AMD EPYC servers joined our “fleet” without a hitch. The servers are built on SuperMicro chassis and motherboards. We can offer both 1 Gbps and 10 Gbps connectivity, and disks can be connected either on-board or through a hardware RAID controller, according to the customer’s preferences. We continue to use drives from our standard offering, namely SATA3/SAS2 or PCIe NVMe. Read more about the differences between SATA and NVMe disks.

Because this platform is new to us, we have of course stocked our warehouse with spare equipment and are ready to use it immediately should any issue arise in production.

Advantages of the hardware for GymBeam’s business

The difference compared to the previous Intel processors is huge: besides the larger number of cores, even the computing power per core is higher. A further performance boost comes from enabling simultaneous multithreading (SMT, AMD’s counterpart to Intel’s Hyper-Threading). We turn Hyper-Threading off on Intel processors for security reasons (to mitigate side-channel vulnerabilities), but on AMD EPYC processors there’s no reason to do so (as of yet, anyway).
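On Linux, you can check whether SMT is currently enabled, and which known CPU vulnerabilities the kernel reports as still exploitable across sibling threads, through sysfs. The sketch below assumes a reasonably recent kernel that exposes /sys/devices/system/cpu/smt/ and /sys/devices/system/cpu/vulnerabilities/; `smt_status` is a hypothetical helper, and matching on the substring "SMT vulnerable" is an assumption about the kernel’s status wording. It only reports the state and changes nothing.

```python
from pathlib import Path

SYSFS_CPU = Path("/sys/devices/system/cpu")


def smt_status(cpu_dir: Path = SYSFS_CPU) -> dict:
    """Summarize SMT state and the vulnerability entries that mention SMT."""
    control_file = cpu_dir / "smt" / "control"
    active_file = cpu_dir / "smt" / "active"
    # "control" holds e.g. "on", "off", "forceoff" or "notsupported";
    # "active" is "1" when sibling threads are currently online.
    control = control_file.read_text().strip() if control_file.exists() else "unknown"
    active = active_file.read_text().strip() == "1" if active_file.exists() else None

    smt_vulnerable = {}
    vuln_dir = cpu_dir / "vulnerabilities"
    if vuln_dir.is_dir():
        for entry in sorted(vuln_dir.iterdir()):
            status = entry.read_text().strip()
            # Assumption: kernels append "SMT vulnerable" to entries such as
            # mds or l1tf when the issue stays exploitable across siblings.
            if "SMT vulnerable" in status:
                smt_vulnerable[entry.name] = status
    return {"control": control, "active": active, "smt_vulnerable": smt_vulnerable}


if __name__ == "__main__":
    print(smt_status())
```

If you decide to disable SMT, the usual routes are the `nosmt` kernel boot parameter or, as root, writing `off` to /sys/devices/system/cpu/smt/control.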

The overall increase in performance brings, first of all, a significant acceleration in web loading thanks to the higher performance per core. GymBeam customers especially welcome this, as shopping in the online store has become even more pleasant. A faster website also helps SEO and raises overall search engine “karma”.

In addition to faster loading, GymBeam gained a large performance reserve for its marketing campaigns: the new infrastructure can handle even a several-fold increase in traffic during intensive advertising.

Last but not least, at GymBeam they can now be sure they are running on the best hardware available 🙂

Would you benefit from upgrading to the EPYC servers?

Have the mega-powerful EPYC processors caught your interest, and are you now wondering whether they would pay off in your case? When it comes to optimizing your price/performance ratio, the number one question is how powerful an infrastructure your project actually needs.

It makes sense to consider AMD EPYC processors when your existing processors are running out of breath and moving up to a higher Intel Xeon line would not make economic sense. That limit currently sits at roughly two 14-core to two 16-core CPUs; above this performance level, Intel’s pricing is disproportionately high.
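The price/performance reasoning above boils down to comparing the cost per core of the configurations that meet your core requirement. The following is a minimal sketch: `ServerOption` and `cheapest_per_core` are hypothetical names, the prices have to come from your own quotes (none are given here), and cost per core is a deliberate simplification that ignores per-core performance, memory, licensing and power.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerOption:
    name: str
    sockets: int
    cores_per_cpu: int
    price: float  # total price of the configuration, in your own currency

    @property
    def total_cores(self) -> int:
        return self.sockets * self.cores_per_cpu

    @property
    def price_per_core(self) -> float:
        return self.price / self.total_cores


def cheapest_per_core(options: list[ServerOption], min_cores: int) -> ServerOption:
    """Return the option with the lowest price per core among those with enough cores."""
    eligible = [o for o in options if o.total_cores >= min_cores]
    if not eligible:
        raise ValueError(f"no option provides at least {min_cores} cores")
    return min(eligible, key=lambda o: o.price_per_core)
```

Configurations below your core requirement are filtered out first, so a cheap small server cannot win on price per core alone.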

Of course, the reason for an upgrade does not have to be purely technical or economic: the feeling that you run your services on the fastest and best hardware the market has to offer also has its value.