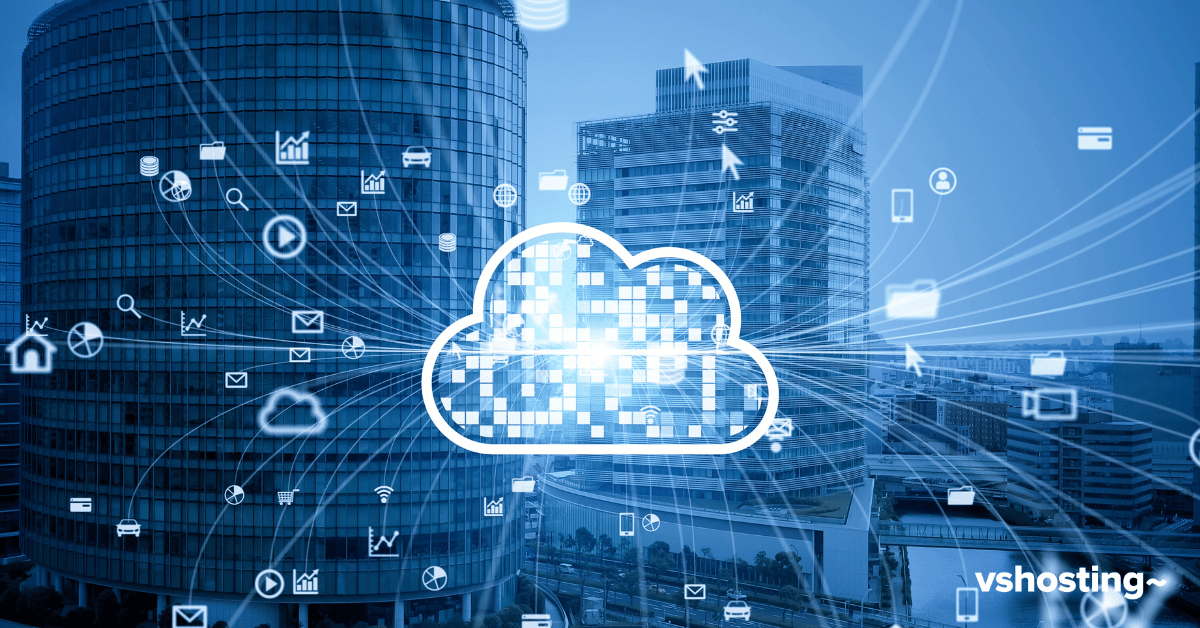

Conquering the cloud: Should you do it in-house or use a partner?

The public cloud beckons businesses of all sizes with its scalability, flexibility, and cost-efficiency. But the migration process is complex and requires careful consideration. As public cloud spending continues to rise, businesses may find themselves at a crucial juncture, wondering if it is best to navigate this migration alone, or work with a cloud service provider (CSP).

The answer, unfortunately, is that it depends. There is no one-size-fits-all solution, and the optimal approach depends on your organisation’s unique circumstances. Before embarking on this journey, you should carefully evaluate your internal capabilities, requirements, and desired outcomes. The questions below can help guide that evaluation:

1. How critical is it to maintain complete control over your data?

2. Are you using a simple cloud setup or a complex multi-cloud environment?

3. What is your budget?

4. Do you have a team with cloud expertise and bandwidth to manage the migration and ongoing operations?

5. Is your cloud migration a straightforward resource transfer, or does it involve intricate configurations and ongoing optimisation needs?

6. How will a partner add value to the process for your organisation?

Now that we have given these questions some thought, let us look at the benefits and disadvantages of both approaches.

Steering the ship yourself

Taking the reins and managing your cloud environment internally offers an unparalleled degree of control and can potentially reduce upfront costs. You get to dictate every aspect of your cloud setup, ensuring it aligns with your specific needs and existing security protocols.

However, venturing solo comes with its own set of challenges.

Successful cloud migration demands a team well-versed in cloud solutions, security best practices, and ongoing optimisation strategies. Building and maintaining this expertise internally can be a significant investment if you do not already have a team to start with. Consider this as well – your existing IT team will bear the additional responsibility of managing the cloud environment, potentially leading to increased workload and delays in core IT operations. Staying updated with the evolving cloud landscape also requires continuous learning and adaptation. Is this something you can afford at this stage?

The power of partnership

On the flip side, a cloud or managed service provider (MSP) brings a wealth of knowledge and experience to the table. These providers have the expertise to expedite the migration process, leveraging their established tools and methodologies. Their experience allows them to design a cloud environment tailored to your specific needs and workloads, often leading to improved outcomes and cost-effectiveness. You also gain access to a team of cloud specialists who can guide you through the complexities of security, compliance, and ongoing management.

The additional factors to consider

Partnering with a cloud or managed service provider is a compelling option but comes with other implications. Firstly, partnering typically involves additional service fees, which can impact the organisation’s overall IT budget. These fees need to be carefully considered and factored into your financial planning to ensure alignment with any budgetary constraints.

Additionally, partnering means entrusting a certain degree of control over your cloud environment to the provider. This shift in control requires open communication and clearly defined Service Level Agreements (SLAs), outlining the roles, responsibilities, and expectations of both parties to ensure transparency and accountability.

A hybrid approach

If you desire the best of both worlds, you can also consider a hybrid approach. This approach leverages internal expertise for basic functionalities while engaging a partner for complex aspects or ongoing management. This way, your internal team can gain valuable cloud knowledge while collaborating with the partner. You also get to focus your resources on core functionalities while outsourcing specialised tasks that require deeper expertise.

Partnering with an MSP can be advantageous, but internal capabilities should not be overlooked. Building internal expertise alongside a well-defined partnership with an MSP can provide a future-proof solution that benefits both parties.

If you are still uncertain about the best approach, consider seeking independent advice from cloud specialists. These experts can assess your specific needs and recommend the most suitable strategy, whether it involves internal management, partnering with an MSP, or adopting a hybrid solution. Whichever path you choose, ensure open communication and clear expectations among all stakeholders involved.

If you are interested in further discussing your specific circumstances with an expert, please do not hesitate to contact us.